YUV is a format used to describe colour inside a pixel. Most of the time, that answer should be enough. But this post is for those rare times when that answer isn’t enough and you need a lot more than a single sentence.

Be warned, though, that I cannot do justice to YUV by starting at YUV. I need to explain the story behind it. The story is as fascinating as the theory. So if you’re one of those people who need a to-the-point explanation of YUV, I apologise right now. Since no human is perfect, and this is my first time writing a technical post, there will be errors. I would be glad to have them pointed out to me.

The Beginning

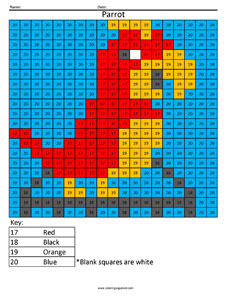

Let’s go hop on a time machine and go to when we were kids (assuming the ones who read this are actually adults). Do you remember those colouring books which you used to spend hours colouring and admiring? In those books, there used to be an exercise which contained a number of small squares arranged to form a large square or a rectangle. Each of those small squares would contain a number and there was a legend at the bottom where each number would be assigned a colour. Something like this:

Your task was to follow the colour code and fill each square with its corresponding colour. In the end you’d be greeted with something cute (most of the time) like this:

Funnily enough, this is exactly how a display works. Any display, be it your smartphone, TV, laptop, desktop or even those huge billboards that play advertisements, uses this same principle for showing a video or an image. They use tiny squares and colour them to show you an image. String a number of images one after the other and you have a video, just like a flip-book. The underlying principle is that easy. Anyway, those small coloured squares are known as pixels and they are the fundamental building blocks of an image (technically it’s atoms (technically-technically it’s quarks but let’s not go there shall we?) but let’s not go there shall we?).

A more formal definition would be: A pixel is the smallest discrete component of an image or a picture. (Where did I get this? Of course from Wikipedia. It’s not like I have a teacher standing right next to me saying he won’t accept my work because it’s been taken from Wikipedia.)

Pixels are also known as picture elements or pels. You can make out these pixels on a large screen (like a monitor or TV) if you peer closely enough.

So let’s go to the next question: how are the colours stored and shown to us? There are many ways, but we’ll look at the two major ones: RGB and YUV.

RGB

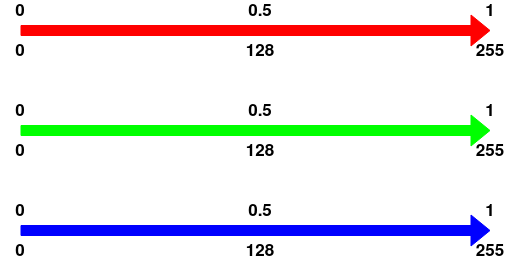

RGB stands for Red Green Blue. It is used by almost every digital image in the world. The theory is simple: each pixel is described by 3 values (or numbers), which give the amount of red, green and blue respectively. Normally, the values range from 0 to 1.

For example, if a pixel contained the colour red, then there is no presence of blue or green components. So the values will be:

R = 1, G = 0, B = 0 or (R, G, B) = (1, 0, 0)

Similarly, for green, the values will be:

R = 0, G = 1, B = 0 or (R, G, B) = (0, 1, 0)

But what if the pixel contained black? Since black means the absence of any colour, all the values will be zero:

R = 0, G = 0, B = 0 or (R, G, B) = (0, 0, 0)

What about white? White means presence of all the colours, so all the values will be maximum:

R = 1, G = 1, B = 1 or (R, G, B) = (1, 1, 1)

Any composite colour? Let’s take purple for example. It contains some part of red and some part of blue, but no green. So the values are:

R = 0.5, G = 0, B = 0.5 or (R, G, B) = (0.5, 0, 0.5)

But since computers suck at decimal points (I can personally vouch for that), they usually map the values to [0, 255], i.e., 0 becomes 0, 1 becomes 255 and any value in between is mapped proportionally. From this mapping, we get a total of 256 x 256 x 256 = 16,777,216 colours.
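Here is a rough sketch of that mapping in Python. The function name `to_8bit` is made up for illustration, and rounding to the nearest integer is my assumption (the mapping only needs to be proportional):

```python
def to_8bit(value):
    """Map a colour value in [0, 1] to an integer in [0, 255]."""
    # Rounding to the nearest integer is an assumption; truncating is also common.
    return int(value * 255 + 0.5)

purple = (0.5, 0.0, 0.5)
print(tuple(to_8bit(v) for v in purple))  # (128, 0, 128)

# Each of the three channels has 256 possible values.
total_colours = 256 ** 3
print(total_colours)  # 16777216
```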

Using these values, we can start filling colours on the screen. In a typical LED screen, each pixel contains 3 LEDs (yeah, like those LED bulbs you use at home, but way smaller). Those three LEDs are special: each of them can emit only one colour, namely red, green or blue. Each value says how bright the corresponding LED must be, i.e., a value of 0 means no brightness (turned off) and a value of 255 means maximum brightness. By using this, we can display all the colours required on the screen.

In fact, you can see these LEDs with a camera and a screen. Take a picture of your screen (monitor or laptop) with your smartphone, holding the camera as near as possible to the screen without losing clarity. If you zoom in on the image, you can easily make out the three LEDs, placed neatly in order.

Let’s try to calculate the space required to store a single image using the RGB format. We need 1 byte of data to store values up to 255. Since we have 3 values per pixel, we’re gonna need 3 bytes of data per pixel. A standard HD image is 1920×1080 pixels, so the total data for an HD image would be 1920*1080*3 = 6220800 bytes, or a little over 6 MB. If we make it into a formula (the only formula in this post, promise), it’ll be:

Size of image = Number of pixels * Bytes per pixel

or

Size of image = Width * Height * Bytes per pixel
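The same formula as a small Python sketch (the function name `image_size_bytes` is just a name I picked for illustration):

```python
def image_size_bytes(width, height, bytes_per_pixel):
    """Size of image = Width * Height * Bytes per pixel."""
    return width * height * bytes_per_pixel

hd_rgb = image_size_bytes(1920, 1080, 3)
print(hd_rgb)                            # 6220800
print(f"{hd_rgb / 1_000_000:.2f} MB")    # 6.22 MB
```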

That covers the basics of RGB. It is widely used in digital image processing (basically Photoshop). I’m pretty sure you’d have heard about RGB at some point in your life. No? So it’s only me then? Damn.

Now then, onwards to uncharted waters:

YUV

YUV has three channels (like RGB): one luminance (Y) channel and two chrominance (U and V) channels. Unlike RGB, the letters aren’t abbreviations of anything (don’t ask me why). While technically it should be Y’UV, we’ll use YUV in this post. Both RGB and YUV represent colours, but the values they use for the same colour can be different. It’s like the difference between kilometers and miles: the numbers differ, but both are measuring the same thing, which is distance.

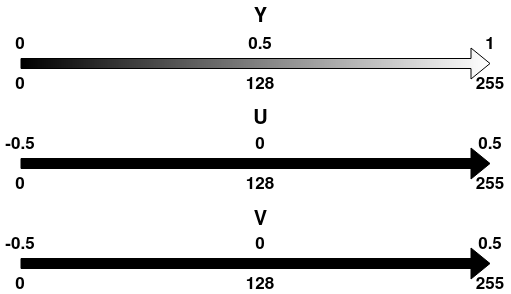

YUV is slightly tricky to understand. Slightly. The value of Y can vary from 0 to 1, with 0 being no brightness and 1 being maximum brightness. But the values of U and V range from -0.5 to +0.5, with 0 being the center.

So for black, since Y denotes the brightness, its value will be 0, and the values of U and V will also be zero:

Y = 0, U = 0, V = 0 or (Y, U, V) = (0, 0, 0)

For white, the value of Y will be maximum (1), but the values of U and V will still be zero:

Y = 1, U = 0, V = 0 or (Y, U, V) = (1, 0, 0)

Yeah, it is weird. Until you get to know how it works. Let’s take another example. Let’s try red. For red, the values are:

Y = 0.3, U = -0.17, V = 0.5 or (Y, U, V) = (0.3, -0.17, 0.5)

The values are pretty confusing to understand. Here’s how I made sense of this madness. The U and V channels together specify what colour you want, and Y specifies how bright that colour is going to be. I mean, it pretty much is the definition of YUV (duh), but imagining a slider that varies the brightness of the colour made much more sense to me than just thinking of them as soulless channels. This link does a pretty good job of visualizing the colours in different formats (the ones we’re interested in are RGB and YPbPr, which is the same as YUV in our case).
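If you want to try a few colours yourself, here is a minimal sketch of an RGB to YUV conversion. Note the assumption: I’m using the BT.601 brightness weights (0.299, 0.587, 0.114), which this post never actually names, and other standards such as BT.709 use different weights and give slightly different numbers. The function name `rgb_to_yuv` is also just for illustration.

```python
# BT.601 luma weights (an assumption; the post does not say which standard it uses).
KR, KG, KB = 0.299, 0.587, 0.114

def rgb_to_yuv(r, g, b):
    """Convert R, G, B in [0, 1] to Y in [0, 1] and U, V in [-0.5, 0.5]."""
    y = KR * r + KG * g + KB * b      # brightness: weighted sum of R, G and B
    u = 0.5 * (b - y) / (1 - KB)      # how far blue is from the brightness
    v = 0.5 * (r - y) / (1 - KR)      # how far red is from the brightness
    return y, u, v

for name, rgb in [("black", (0, 0, 0)), ("white", (1, 1, 1)), ("red", (1, 0, 0))]:
    y, u, v = rgb_to_yuv(*rgb)
    print(f"{name}: Y={y:.2f} U={u:.2f} V={v:.2f}")
```

Dividing by (1 - KB) and (1 - KR) is what squeezes U and V into the -0.5 to +0.5 range.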

If we are to transform from decimal to integers for use in computers, the Y would be similar to RGB, i.e., [0, 1] is mapped to [0, 255]. But for UV, [-0.5, 0.5] is mapped to [0, 255]. That means a value of 0 corresponds to a value of 128, a value of -0.5 corresponds to 0 and a value of 0.5 corresponds to 255.
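A small sketch of that integer mapping, assuming the simple full-range [0, 255] scaling described above (the helper name `yuv_to_8bit` and the round-to-nearest choice are mine):

```python
def yuv_to_8bit(y, u, v):
    """Map Y in [0, 1] and U, V in [-0.5, 0.5] to integers in [0, 255]."""
    def scale(x):
        # Round to nearest and clamp to the valid range; rounding is an assumption.
        return max(0, min(255, int(x * 255 + 0.5)))
    # U and V are shifted by 0.5 so that their centre (0) lands mid-range.
    return scale(y), scale(u + 0.5), scale(v + 0.5)

print(yuv_to_8bit(0.0, 0.0, 0.0))    # black -> (0, 128, 128)
print(yuv_to_8bit(1.0, 0.0, 0.0))    # white -> (255, 128, 128)
print(yuv_to_8bit(0.5, -0.5, 0.5))   # extremes of U and V -> (128, 0, 255)
```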

Why use YUV if it is so confusing? Because YUV has one major advantage. It has separate channels for brightness (Y) and colour (UV), which is remarkably similar to how our eyes work. Human eyes have different cells for perceiving brightness and colour, called rods and cones respectively (high school biology anyone?). Our eyes contain around 120 million rods but only about 5 million cones. That means we are more sensitive to changes in brightness than to changes in colour. That is why we can make out the shapes of objects but not their colours at night or in dark areas. Using that same principle, we can do some amazing optimizations to how we store the pixel information. It’ll be easier with an example:

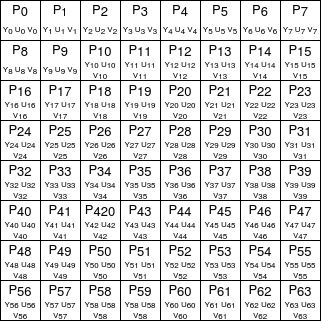

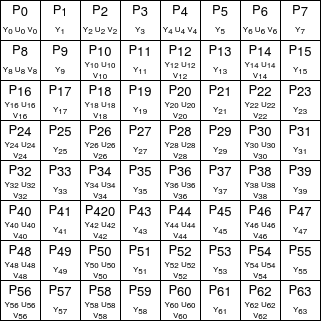

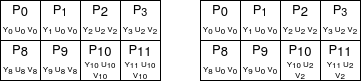

Let’s say I have a YUV image of 8×8 pixels. It will look like this.

Here each pixel is defined by 3 values, Y, U and V. Each pixel is named with a number for easier reference (P0, P1 and so on). So Y0, U0, V0 are the YUV values for P0, and so on. Now, for the magic. I can remove U and V values of alternate pixels and the image will still look the same to us. Mostly same.

This means I don’t have to store every value of U and V. This format is known as YUV 4:2:2 (referred to as YUV422). There are multiple ways to reduce the U and V samples: taking alternate values, averaging each pair of values and so on. We are considering only the alternate values because that’s simpler to understand. This process of reducing the UV samples is called chroma subsampling.

Now for one more magic trick: I can also remove the U and V values of alternate pixels along the columns (that is, of every other row) and it still wouldn’t make a huge difference to how the image looks. This format is called YUV 4:2:0 (YUV420). Pretty neat eh?

This basically means all the U and V values that are present in YUV422 and YUV420 are shared by the neighboring pixels. So in YUV422, U0 and V0 are shared by P0 and P1 and so on. Similarly in YUV420, U0 and V0 are shared by P0, P1, P8 and P9 and so on. This sharing is done as part of displaying and not as part of storing the image.
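To make the dropping and sharing concrete, here is a rough Python sketch on an 8×8 image. Everything in it is illustrative: the pixel values are made up, the function names `subsample` and `reconstruct` are mine, and it uses the "keep alternate values" approach rather than averaging.

```python
WIDTH, HEIGHT = 8, 8

# Hypothetical test image: pixel Pn gets made-up (Y, U, V) values.
# Pixels are numbered row by row, so P8 sits directly below P0.
pixels = [(n, 100 + n, 200 - n) for n in range(WIDTH * HEIGHT)]

def subsample(pixels, width, height, step_x, step_y):
    """Keep every Y, but only the U, V of every step_x-th pixel in every step_y-th row."""
    ys = [p[0] for p in pixels]
    uvs = {}
    for row in range(0, height, step_y):
        for col in range(0, width, step_x):
            _, u, v = pixels[row * width + col]
            uvs[(row, col)] = (u, v)
    return ys, uvs

def reconstruct(ys, uvs, width, step_x, step_y):
    """Rebuild full pixels for display by sharing each stored (U, V) with its neighbours."""
    out = []
    for n, y in enumerate(ys):
        row, col = divmod(n, width)
        # Snap to the pixel whose chroma was kept for this group.
        u, v = uvs[(row - row % step_y, col - col % step_x)]
        out.append((y, u, v))
    return out

# YUV422: drop the chroma of alternate pixels within each row.
ys422, uv422 = subsample(pixels, WIDTH, HEIGHT, 2, 1)
# YUV420: additionally drop the chroma of alternate rows.
ys420, uv420 = subsample(pixels, WIDTH, HEIGHT, 2, 2)

img422 = reconstruct(ys422, uv422, WIDTH, 2, 1)
img420 = reconstruct(ys420, uv420, WIDTH, 2, 2)

print(len(uv422), len(uv420))  # stored (U, V) pairs: 32 16
```

After reconstruction, P1 in `img422` carries U0 and V0, and P1, P8 and P9 in `img420` all carry U0 and V0, while every pixel keeps its own Y.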

How much does this save us in memory? The original YUV, with all the U and V values intact, is also known as YUV444, and it takes the same space as RGB. So an HD image will cost us 6 MB.

YUV422, on the other hand, has 2 Ys, 1 U and 1 V for every 2 pixels. That means 1 Y, 0.5 U and 0.5 V for one pixel. So if each value takes 1 byte, then in total they’ll take 1 + 0.5 + 0.5 = 2 bytes per pixel. Plugging this value into our size formula, we get 1920*1080*2 = 4147200 bytes, or a little over 4 MB. This gives us a 33% reduction in size over YUV444.

YUV420, on the other other hand, has 4 Ys, 1 U and 1 V for every 4 pixels. That means 1 Y, 0.25 U and 0.25 V for one pixel. So the size is 1 + 0.25 + 0.25 = 1.5 bytes per pixel. For this, we’ll get 1920*1080*1.5 = 3110400 bytes, or a little over 3 MB. Comparing them, we can see that there is a 50% reduction in size (and consequently bandwidth) requirements when using YUV420 over YUV444 (and RGB). The only downside is some loss of colour information, but even that is not very apparent visually.
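The three calculations side by side, as a quick sketch that assumes 1 byte per sample, like the text above (the function name `bytes_per_pixel` is only for illustration):

```python
def bytes_per_pixel(y_samples, u_samples, v_samples, pixels):
    """Average bytes per pixel, assuming each stored sample takes 1 byte."""
    return (y_samples + u_samples + v_samples) / pixels

formats = {
    "YUV444": bytes_per_pixel(1, 1, 1, 1),  # every pixel keeps its own Y, U and V
    "YUV422": bytes_per_pixel(2, 1, 1, 2),  # 2 Ys, 1 U and 1 V for every 2 pixels
    "YUV420": bytes_per_pixel(4, 1, 1, 4),  # 4 Ys, 1 U and 1 V for every 4 pixels
}

for name, bpp in formats.items():
    size = int(1920 * 1080 * bpp)
    saving = 1 - bpp / formats["YUV444"]
    print(f"{name}: {bpp} bytes/pixel, {size} bytes, {saving:.0%} smaller than YUV444")
```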

So where did YUV come from? Initially, TVs supported only black and white video. So they had two channels of information: one for the video, which contained only the brightness information (since black and white meant you only needed the brightness values), and one for audio. When colour TV was invented later, there were two choices: create a new format for sending colour signals, or build upon the existing standards to support colour signals. So they added two more channels for the colours. Those 3 channels (one brightness and two colour channels) are basically the YUV format. Using this format meant the existing infrastructure for video broadcasting wouldn’t have to be drastically changed. Since you’d just be adding some extra channels to handle colour, a black and white TV receiving the signal would just ignore the colours and show the video in black and white.

So with minimal changes (compared to something like RGB) you can show colours on a colour TV and black and white on existing TVs. And using YUV420 meant a reduction in size by half. Couple this with the fact that bandwidth was ridiculously expensive (due to Jio not being present), and the answer was obvious. Go with YUV. And thus, we still use YUV even now. Nearly every video you watch uses the YUV format. Yeah. It’s that popular.

YUV is not constrained to these two subsampled formats only. There are YUV 4:1:1, UYVY, NV12, NV21 and lots more. But we will stop here to reduce the possibility of an information overload (and also because I am not familiar with most of them).

Also, when I talk about YUV, it is actually YCbCr. YUV is for analog signals, used in older TVs (those heavy boxes, also known as Mjölnir’s older brother). YCbCr is for digital signals. But the names are used interchangeably, so most of the time, the difference does not matter.

That, hopefully, should help you get started with the YUV (YCbCr) format.

References: